Chatting with Odoo: A Simple Way to Get Answers with AI

If you’ve ever tried to pull quick answers from Odoo ERP, you know it can be clunky. Even basic questions like “What’s our billable utilization this week?” mean jumping across reports or writing custom queries.

We wanted to fix that by letting anyone ask a question in plain English and get an answer right away. Using the Model Context Protocol (MCP), an open standard for connecting LLMs to external tools and data, we built a system where an LLM does the heavy lifting behind the scenes.

Here’s a quick overview of how it works.

How It Works

The big idea:

When a user asks a question, the AI figures out:

What data it needs

Which Odoo models to query

How to combine everything into a clear answer

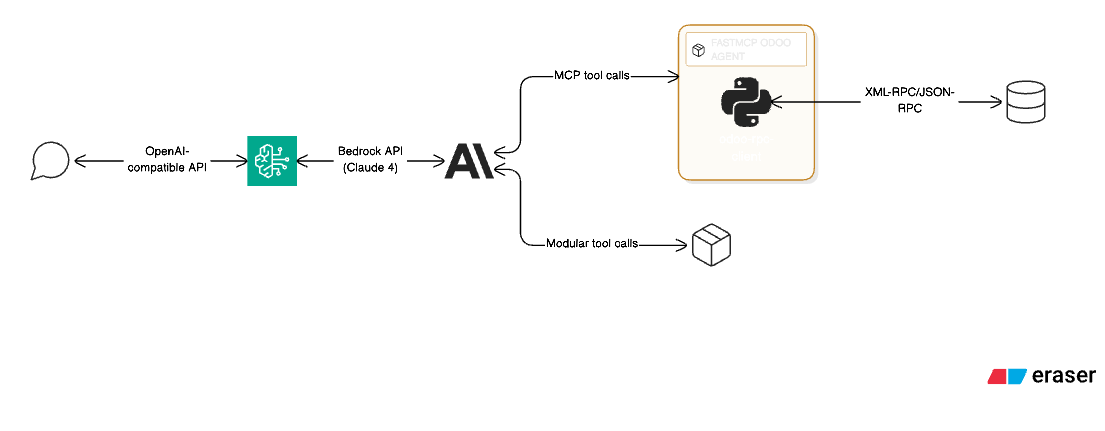
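In MCP terms, each of those decisions ends up as a tool call: a JSON-RPC 2.0 request with the method `tools/call`, a tool name, and arguments. Here is a minimal sketch of what such a request could look like for the utilization question. The tool name `query_odoo`, the choice of `account.analytic.line`, and the domain and fields are assumptions for illustration, not our actual setup.

```python
import json


def build_tool_call(request_id: int, tool: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return json.dumps(message, indent=2)


# Hypothetical call the model might issue for
# "What's our billable utilization this week?"
request = build_tool_call(
    1,
    "query_odoo",  # hypothetical tool name
    {
        "model": "account.analytic.line",  # assumed: timesheet lines live here
        "domain": [["date", ">=", "2025-01-06"]],  # illustrative date filter
        "fields": ["employee_id", "unit_amount"],
    },
)
print(request)
```

In practice the LLM client builds this message for you; the point is that "which model to query" becomes plain structured arguments.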

Under the hood, here’s what ties it all together:

FastMCP – A Python framework for building MCP servers. We use it as our orchestration layer, defining “tools” (functions) that fetch Odoo data.

Odoo RPC Client – Talks to Odoo’s API to pull records.

Open WebUI – A chat interface where people type their questions.

Bedrock Access Gateway – Exposes Amazon Bedrock through an OpenAI-compatible API, which is how Open WebUI connects to Claude 4 securely.

Claude 4 – The large language model that plans, fetches, and explains.
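To make the first two pieces concrete, here is a rough sketch of how an Odoo client and an MCP tool could fit together. It assumes Odoo's XML-RPC external API (the `/xmlrpc/2/common` and `/xmlrpc/2/object` endpoints) and an API key for authentication. The names `OdooClient`, `register_tools`, and `query_odoo` are hypothetical, and our real tools are more specific than this generic lookup.

```python
import xmlrpc.client


class OdooClient:
    """Thin wrapper around Odoo's XML-RPC external API (hypothetical helper)."""

    def __init__(self, url, db, user, api_key, common=None, models=None):
        self.db = db
        self.api_key = api_key
        # Proxies can be injected for testing; by default they point at the
        # standard Odoo endpoints. No network call happens until a method runs.
        if common is None:
            common = xmlrpc.client.ServerProxy(f"{url}/xmlrpc/2/common")
        if models is None:
            models = xmlrpc.client.ServerProxy(f"{url}/xmlrpc/2/object")
        self.models = models
        self.uid = common.authenticate(db, user, api_key, {})

    def search_read(self, model, domain, fields, limit=100):
        """Return matching records as a list of dicts."""
        return self.models.execute_kw(
            self.db, self.uid, self.api_key,
            model, "search_read", [domain],
            {"fields": fields, "limit": limit},
        )


def register_tools(mcp, odoo: OdooClient) -> None:
    """Attach Odoo lookups to an MCP server object exposing a `tool()` decorator."""

    @mcp.tool()
    def query_odoo(model: str, domain: list, fields: list[str]) -> list[dict]:
        """Search an Odoo model and return the selected fields."""
        return odoo.search_read(model, domain, fields)
```

With FastMCP installed, something like `mcp = FastMCP("odoo")`, then `register_tools(mcp, client)` and `mcp.run()` should expose the tool to the model. The docstring matters: it is the description the LLM reads when deciding which tool to call.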

Example in Action

Let’s say someone types:

“How much AWS funding is left for Client XYZ?”

Claude breaks this into steps:

Fetch the allocated AWS budget.

Sum expenses tagged to AWS.

Subtract the amount used from the allocated total.

Return the answer in plain English.
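The arithmetic behind those steps is simple once the records are fetched. Below is a small sketch with made-up numbers. The record shape (`amount`, `tags`) and the helper names are assumptions for illustration; real Odoo records would use whatever fields your models define.

```python
from decimal import Decimal


def remaining_funding(allocated: Decimal, expenses: list[dict]) -> Decimal:
    """Allocated budget minus the sum of AWS-tagged expenses."""
    used = sum(
        (Decimal(str(e["amount"])) for e in expenses if "AWS" in e["tags"]),
        Decimal("0"),
    )
    return allocated - used


def describe(client: str, remaining: Decimal) -> str:
    """Phrase the result the way a chat answer might."""
    return f"{client} has ${remaining:,.2f} of AWS funding left."


# Made-up sample data standing in for records fetched from Odoo.
expenses = [
    {"amount": 12000, "tags": ["AWS"]},
    {"amount": 3000, "tags": ["Travel"]},  # ignored: not tagged AWS
    {"amount": 8000.50, "tags": ["AWS", "Training"]},
]
left = remaining_funding(Decimal("50000"), expenses)
print(describe("Client XYZ", left))
# prints: Client XYZ has $29,999.50 of AWS funding left.
```

In the real flow Claude does the planning and phrasing itself; keeping the calculation in a tool like this is one way to avoid relying on the model for arithmetic.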

Instead of building a report or writing SQL, it’s just a quick chat.

Architecture Diagram

Why This Approach Works

No more manual reporting – Users just ask questions.

Modular tools – You can add new queries as needed.

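Standardized protocol – MCP gives every tool the same interface (a name, a description, and typed arguments), so the model calls them in a consistent, predictable way.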

Secure – Claude is served through Amazon Bedrock, so all data stays in your AWS environment.

What’s Next

Right now, our setup is tailored to our own Odoo models, but the idea is broadly applicable. Over time, we’d like to share a template others can adapt. If you’re thinking about doing something similar, we’re happy to compare notes.